All our servers and company laptops went down at pretty much the same time. Laptops have been boot-looping to the blue screen of death. It’s all very exciting, personally, as someone not responsible for fixing it.

Apparently caused by a bad CrowdStrike update.

Edit: now being told we (almost all of whom work from home) need to come into the office Monday, as they can only apply the fix in person. We’ll see if that changes over the weekend…

Reading into the updates some more… I’m starting to think this might just destroy CrowdStrike as a company altogether. Between the mountain of lawsuits almost certainly incoming and the total destruction of any public trust in the company, I don’t see how they survive this. Just absolutely catastrophic on all fronts.

If all the computers stuck in a boot loop can’t be recovered… yeah, that’s a lot of cost for a lot of businesses. Add to that the immediate impact of missed flights and who knows what’s happening at the hospitals. Nightmare scenario if you’re responsible for it.

This sort of thing is exactly why you push updates to groups in stages, not to everything all at once.
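To make “push updates to groups in stages” concrete, here is a minimal sketch of one common approach: assign each host to a rollout ring by hashing its name, then widen the set of eligible rings one stage at a time. The ring names, percentages, and function names are all hypothetical; real deployment tools define rings in their own ways.

```python
import hashlib

# Hypothetical rollout rings: (name, cumulative fraction of the fleet covered).
RINGS = [("canary", 0.01), ("early", 0.10), ("broad", 0.50), ("everyone", 1.00)]


def bucket(host: str) -> float:
    """Map a hostname to a stable number in [0, 1) so a host always lands in the same ring."""
    digest = hashlib.sha256(host.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") / 2**64


def ring_for(host: str) -> str:
    """Return the first ring whose cumulative fraction covers this host's bucket."""
    b = bucket(host)
    for name, cumulative in RINGS:
        if b < cumulative:
            return name
    return RINGS[-1][0]


def eligible(host: str, current_stage: int) -> bool:
    """A host receives the update only once the rollout has reached its ring."""
    names = [name for name, _ in RINGS]
    return names.index(ring_for(host)) <= current_stage


if __name__ == "__main__":
    fleet = [f"host-{i:04d}" for i in range(10_000)]
    for stage, (name, _) in enumerate(RINGS):
        count = sum(eligible(h, stage) for h in fleet)
        print(f"stage {stage} ({name}): {count} of {len(fleet)} hosts updated")
```

Hashing keeps each machine’s ring stable between runs, so the same small slice of the fleet acts as the canary every time instead of a fresh random sample.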

Looks like the laptops can be recovered with a bit of finagling, so fortunately they haven’t bricked everything.

And yeah staged updates or even just… some testing? Not sure how this one slipped through.

Agreed, this will probably kill them over the next few years unless they can really magic up something.

They probably don’t get sued - their contracts will have indemnity clauses against exactly this kind of thing, so unless they seriously misrepresented what their product does, this probably isn’t a contract breach.

If you are running CrowdStrike, it’s probably because you have some regulatory obligations and an auditor to appease. You aren’t going to be able to just turn it off overnight, but I’m sure there are going to be some pretty awkward meetings when it comes to contract renewals in the next year, and I can’t imagine them seeing much growth.

Nah. This has happened with every major corporate antivirus product. Multiple times. And the top IT people advising on purchasing decisions know this.

Yep. This is just uninformed people thinking this doesn’t happen. It’s been happening since AV was born. It’s not new, and this will not kill CS; they’re still king.

At my old shop we still had people giving money to Check Point and Splunk, despite numerous problems and a huge cost, because they had favourites.

Don’t most indemnity clauses have exceptions for gross negligence? Pushing out an update this destructive without it getting caught by any quality control checks sure seems grossly negligent.

explain to the project manager with crayons why you shouldn’t do this

Can’t; the project manager ate all the crayons

Why is it bad to do on a Friday? Based on your last paragraph, I would have thought Friday is probably the best weekday to do it.

Most companies, mine included, try to roll out updates during the middle or start of a week. That way if there are issues the full team is available to address them.

And hence the term read-only Friday.

> rolling out an update to production that there was clearly no testing

Or someone selected “env2” instead of “env1” (#cattleNotPets names) and tested in prod by mistake.

Look, it’s a gaffe and someone’s fired. But it doesn’t mean fuck ups are endemic.

Was it not possible for MS to design their safe mode to still “work” when BitLocker was enabled? Seems strange.

I’m not sure what you’d expect to be able to do in a safe mode with no disk access.

I think you’re on the nose, here. I laughed at the headline, but the more I read the more I see how fucked they are. Airlines. Industrial plants. Fucking governments. This one is big in a way that will likely get used as a case study.

The London Stock Exchange went down. They’re fukd.

Testing in production will do that

Not everyone is fortunate enough to have a separate testing environment, you know? Manglement has to cut costs somewhere.

Manglement is the good term lmao

Don’t we blame MS at least as much? How does MS let an update like this push through their Windows Update system? How does an application update make the whole OS unable to boot? Blue screens on Windows have been around for decades, why don’t we have a better recovery system?

CrowdStrike runs at ring 0, effectively as part of the kernel, like a device driver. There are no safeguards at that level. Extreme testing and diligence are required, because these are the consequences of getting it wrong. This is entirely on CrowdStrike.

What lawsuits do you think are going to happen?

They can have all the clauses they like but pulling something like this off requires a certain amount of gross negligence that they can almost certainly be held liable for.

Whatever you say my man. It’s not like they go through very specific SLA conversations and negotiations to cover this or anything like that.

I forgot that only people you have agreements with can sue you. This is why Boeing hasn’t been sued once recently for their own criminal negligence.

👌👍

😔💦🦅🥰🥳

Forget lawsuits, they’re going to be in front of congress for this one

For what? At best it would be a hearing on the challenges of national security with industry.

>Make a kernel-level antivirus

>Make it proprietary

>Don’t test updates… for some reason??

I mean, I know it’s easy to be critical, but this was my exact thought: how the hell didn’t they catch this in testing?

In my storied career, I have had numerous managers tell me there was no time for QA. Or documentation. Or backups. Or redundancy. And so on.

Move fast and break things! We need things NOW NOW NOW!

Completely justified reaction. A lot of the time tech companies and IT staff get shit for stuff that, in practice, can be really hard to detect before it happens. There are all kinds of issues that can arise in production that you just can’t test for.

But this… This has no justification. An issue this immediate, this widespread, would have instantly been caught with even the most basic testing. The fact that it wasn’t raises massive questions about the safety and security of CrowdStrike’s internal processes.

From what I’ve heard, and to play devil’s advocate, it coincided with Microsoft pushing out a security update at basically the same time, which caused the issue. So it’s possible that they had no way to test it properly, because they didn’t have the update at hand before it rolled out. So the fault wasn’t only a bug in the CS driver, but the driver’s interaction with the new Windows update, which they didn’t have.

You left out > Profit

Oh… Wait…Hang on a sec.

Lots of security systems are kernel-level (at least partially); this includes SELinux and AppArmor, by the way. It’s a necessity for these things to actually be effective.

never do updates on a Friday.

Yep, anything done on Friday can enter the world on a Monday.

I don’t really have any plans most weekends, but I sure as shit don’t plan on spending it fixing Friday’s fuckups.

And honestly, anything that can be done Monday is probably better done on Tuesday. Why start off your week by screwing stuff up?

We have a team policy to never do externally facing updates on Fridays, and we generally avoid Mondays as well unless it’s urgent. Here’s roughly what each day is for:

- Monday - urgent patches that were ready on Friday; everyone WFH

- Tuesday - most releases; work in-office

- Wed - fixing stuff we broke on Tuesday/planning the next release; work in-office

- Thu - fixing stuff we broke on Tuesday, closing things out for the week; WFH

- Fri - documentation, reviews, etc; WFH

If things go sideways, we come in on Thu to straighten it out, but that almost never happens.
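That schedule is easy to turn into a pre-deploy check. A minimal sketch, assuming Python’s `date.weekday()` numbering (Monday is 0); the function name and `urgent` flag are hypothetical, and blocking weekends is an added assumption, since the policy above doesn’t mention them.

```python
from datetime import date

# date.weekday() numbering: Monday == 0 ... Sunday == 6.
MONDAY, FRIDAY, SATURDAY, SUNDAY = 0, 4, 5, 6


def may_release(day: date, urgent: bool = False) -> bool:
    """Return True if an externally facing release is allowed on `day` under the policy above."""
    weekday = day.weekday()
    if weekday in (FRIDAY, SATURDAY, SUNDAY):
        # Never on Fridays; weekends are blocked too (assumption, not stated in the policy).
        return False
    if weekday == MONDAY:
        # Monday is reserved for urgent patches that were ready on Friday.
        return urgent
    # Tuesday is the main release day; Wed/Thu releases fix what Tuesday broke.
    return True
```

For example, `may_release(date(2024, 7, 19))` returns `False`, since 19 July 2024 was a Friday.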

Actually I was not even joking. I also work in IT and have exactly the same opinion. Friday is for easy stuff!

You posted this 14 hours ago, which would have made it 4:30 am in Austin, Texas, where CrowdStrike is based. You may have felt the effect on Friday, but it’s extremely likely that the person who made the change did it late on a Thursday.

Never update unless something is broken.

That’s advice so smart you’re guaranteed to have massive security holes.

This is AV, and it’s even possible the problem is in the definitions (for example, some Windows file deleted as a false positive). You update those daily.

Yeah my plans of going to sleep last night were thoroughly dashed as every single windows server across every datacenter I manage between two countries all cried out at the same time lmao

I always wondered who even used Windows Server, given how marginal its market share is. Now I know from the news.

Marginal? You must be joking. A vast amount of servers run on Windows Server. Where I work alone we have several hundred and many companies have a similar setup. Statista put the Windows Server OS market share over 70% in 2019. While I find it hard to believe it would be that high, it does clearly indicate it’s most certainly not a marginal percentage.

I’m not getting an account on Statista, and I agree that its market share isn’t “marginal” in practice, but something is up with those figures, since overwhelmingly internet hosted services are on top of Linux. Internal servers may be a bit different, but I’d expect “servers” to include internet servers…

Most servers aren’t Internet-facing.

There are a ton of Internet-facing servers: the vast majority of cloud instances, and the hosts of every cloud provider except Microsoft (and even their in-house “Windows” for Azure hosting is somehow different, though they aren’t public about it).

In terms of on-premises servers, I’d even say HPC clusters may outnumber internal Windows servers. While there are relatively few of them, each one represents racks and racks of servers, and that market is 100% Linux.

I know a couple of retailers and at least two game studios are keeping at-scale Windows deployments a thing, but in my experience Linux mostly dominates large on-premises scale-out deployments.

It just seems like Linux is so likely to be used in scenarios with lots of horizontal scaling that it’s hard to imagine Windows still holding a majority share of the market when all is said and done, given that it’s usually deployed in smaller quantities at any given site.

It’s stated in the synopsis, below where it says you need to pay for the article. Anyway, it might be true, as a single hosting server often runs up to hundreds of Windows machines. But it really depends on what is measured and the method used, which we don’t know, because who the hell has a Statista account anyway.

> since overwhelmingly internet hosted services are on top of Linux

This is a common misconception. Most internet hosted services are behind a Linux box, but that doesn’t mean those services actually run on Linux.

This is a CrowdStrike issue specifically related to the Falcon sensor. It happens to affect only Windows hosts.

Well, I’ve seen some, but they usually don’t have automatic updates and generally do not have access to the Internet.

It’s only marginal for running custom code. Every large organization has at least a few of them running important out-of-the-box services.

Not too long ago, a lot of Customer Relationship Management (CRM) software ran on MS SQL Server. Businesses made significant investments in software and training, and some of them don’t have the technical, financial, or logistical resources to adapt - momentum keeps them using Windows Server.

For example, small businesses that are physically located in rural areas can’t use cloud-based services because rural internet is too slow and unreliable. It’s not quite the case that there’s no amount of money you can pay for a good internet connection in rural America, but last time I looked into it, Verizon wanted to charge me $20,000 per mile to run a fiber optic cable from the nearest town to my client’s farm.

Almost everyone, because the Windows server market share isn’t marginal at all.

How many cups of coffee have you drunk in the last 12 hours?

I work in a data center

I lost count

What was Dracula doing in your data centre?

Because he’s Dracula. He’s twelve million years old.

THE WORMS

I work in a datacenter, but no Windows. I slept so well.

Though a couple years back some ransomware that also impacted Linux ran through, but I got to sleep well because it only bit people with easily guessed root passwords. It bit a lot of other departments at the company though.

This time even the Windows folks were spared, because CrowdStrike wasn’t the solution they infested themselves with (they use other providers, who I fully expect to screw up the same way one day).

There was a point where words lost all meaning and I think my heart was one continuous beat for a good hour.

Did you feel a great disturbance in the force?

Oh yeah I felt a great disturbance (900 alarms) in the force (Opsgenie)

How’s it going, Obi-Wan?

Here’s the fix (or rather the workaround, released by CrowdStrike):

1. Boot to safe mode/recovery.
2. Go to C:\Windows\System32\drivers\CrowdStrike
3. Delete the file matching “C-00000291*.sys”
4. Boot the system normally.
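The matching step is simple enough to sketch. This is an illustration only, assuming Python can reach the affected volume (for example, a disk attached to a working machine); a stock Windows recovery environment has no Python interpreter. One deliberate departure from the steps above: the hypothetical `quarantine` helper moves the file to a backup folder instead of deleting it, so the change can be undone. Function names are made up for this sketch.

```python
import shutil
from pathlib import Path

# Folder and filename pattern from CrowdStrike's published workaround.
# On a disk mounted elsewhere the drive letter will differ, so both functions take a path.
DRIVER_DIR = Path(r"C:\Windows\System32\drivers\CrowdStrike")
PATTERN = "C-00000291*.sys"


def find_bad_channel_files(driver_dir: Path = DRIVER_DIR) -> list[Path]:
    """Return the files in driver_dir that match the faulty channel-file pattern."""
    return sorted(driver_dir.glob(PATTERN))


def quarantine(files: list[Path], backup_dir: Path, dry_run: bool = True) -> list[Path]:
    """Move files into backup_dir instead of deleting them, so the change can be undone.

    With dry_run=True (the default) nothing on disk changes; the planned
    destinations are returned so they can be reviewed first.
    """
    planned = [backup_dir / f.name for f in files]
    if not dry_run:
        backup_dir.mkdir(parents=True, exist_ok=True)
        for src, dst in zip(files, planned):
            shutil.move(str(src), str(dst))
    return planned


if __name__ == "__main__":
    for path in find_bad_channel_files():
        print("would quarantine:", path)
```

Defaulting to a dry run means an accidental invocation only lists what it would touch.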

It’s disappointing that the fix is so easy to perform and yet it’ll almost certainly keep a lot of infrastructure down for hours, because a majority of people seem too scared to try to fix anything on their own machine (or aren’t trusted to, so they can’t even if they know how).

They also gotta get the fix through a trusted channel and not randomly on the internet. (No offense to the person that gave the info, it’s maybe correct but you never know)

Yeah, and it’s unknown whether CS is still active after the workaround (source: a Hacker News commenter).

True, but knowing what the fix might be means you can Google it and see what comes back. It was on StackOverflow for example, but at the time of this comment has been taken offline for moderation - whatever that means.

Meh. Even if it bricked crowdstrike instead of helping, you can just restore the file you deleted. A file in that folder can’t brick a windows system.

Yeah and a lot of corpo VPNs are gonna be down from this too.

This sort of fix might not be accessible to a lot of employees who don’t have admin access on their company laptops, and if the laptop can’t be accessed remotely by IT then the options are very limited. Trying to walk a lot of nontechnical users through this over the phone won’t go very well.

Yup, that’s me. We booted into safe mode, tried navigating into the CrowdStrike folder and boom: permission denied.

Half our shit can’t even boot into safe mode because it’s encrypted and we don’t have the keys rofl

If you don’t have the keys, what the hell are you doing? We have BitLocker enabled and we have a way to get the recovery key, so it’s not a problem. Just a huge pain in the ass.

I went home lol. Some other poor schmucks are probably gonna reformat the computers.

Might seem easy to someone with a technical background. But the last thing businesses want to be doing is telling average end users to boot into safe mode and start deleting system files.

If that started happening en masse we would quickly end up with far more problems than we started with. Plenty of users would end up deleting system32 entirely or something else equally damaging.

I do IT for some stores. My team lead briefly suggested having store managers try to do this fix. I HARD vetoed that. That’s only going to do more damage.

I wouldn’t fix it if it’s not my responsibility at work. What if I mess up and break things further?

When things go wrong, best to just let people do the emergency process.

I’m still on a bridge while we wait for BitLocker recovery keys so we can actually boot into safe mode, but the BitLocker key server is down as well…

Gonna be a nice test of proper backups and disaster recovery protocols for some organisations

Chaos Monkey test

Man, it sure would suck if you could still get to safe mode by pressing F8. Can you imagine how terrible that’d be?

You hold down Shift while restarting or booting and you get a recovery menu. I don’t know why they changed this behaviour.

That was the dumbest thing to learn this morning.

A driver failure, yeesh. It always sucks to deal with it.

Not that easy when it’s a fleet of servers in multiple remote data centers. Lots of IT folks will be spending their weekend sitting in data center cages.

CrowdStrike: It’s Friday, let’s throw it over the wall to production. See you all on Monday!

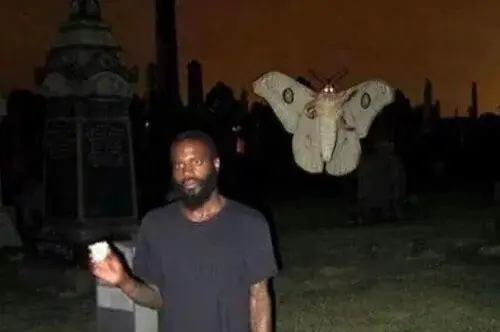

(so hard picking which meme to use)

Good choice, tho. Is the image AI?

It’s a real photograph from this morning.

Not sure, I didn’t make it. Just part of my collection.

Fair enough!

We did it guys! We moved fast AND broke things!

When your push to prod on Friday causes a small but measurable drop in global GDP.

Actually, it may have helped slow climate change a little

The earth is healing 🙏

For part of today

With all the aircraft on the ground, it was probably a noticeable change. Unfortunately, those people are still going to end up flying at some point, so the reduction in CO2 output on Friday will just be made up for over the next few days.

Definitely not small, our website is down so we can’t do any business and we’re a huge company. Multiply that by all the companies that are down, lost time on projects, time to get caught up once it’s fixed, it’ll be a huge number in the end.

GDP is typically stated by the year. One or two days lost, even if it was 100% of the GDP for those days, would still be less than 1% of GDP for the year.
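A quick back-of-the-envelope check of that claim, assuming economic output is spread evenly over a 365-day year (a simplification):

$$\frac{2\ \text{days}}{365\ \text{days}} \approx 0.0055 = 0.55\%$$

So even two days with zero output would come to roughly half a percent of annual GDP.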

I know people who work at major corporations who said they were down for a bit, it’s pretty huge.

Does your web server run windows? Or is it dependent on some systems that run Windows? I would hope nobody’s actually running a web server on Windows these days.

I have absolutely no idea. Not my area of expertise.

They did it on Thursday. All of SFO was BSODed for me when I got off a plane at SFO Thursday night.

Was it actually pushed on Friday, or was it a Thursday night (US central / pacific time) push? The fact that this comment is from 9 hours ago suggests that the problem existed by the time work started on Friday, so I wouldn’t count it as a Friday push. (Still, too many pushes happen at a time that’s still technically Thursday on the US west coast, but is already mid-day Friday in Asia).
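CrowdStrike’s post-incident review puts the release of the faulty content update at 04:09 UTC on Friday, 19 July 2024. Taking that figure as given, this sketch converts it to a few local time zones with Python’s standard `zoneinfo` module (which needs the system time zone database or the `tzdata` package). The zone list is just an illustrative choice.

```python
from datetime import datetime, timezone
from zoneinfo import ZoneInfo

# Release time of the faulty channel file, as stated in CrowdStrike's post-incident review.
RELEASE_UTC = datetime(2024, 7, 19, 4, 9, tzinfo=timezone.utc)

# Austin is on US Central time; Sydney and Auckland sit on the other side of the date line.
ZONES = ["America/Chicago", "America/Los_Angeles", "Australia/Sydney", "Pacific/Auckland"]


def local_times(moment: datetime, zones: list[str]) -> dict[str, str]:
    """Render one instant as a local weekday, date, and time in each IANA zone."""
    return {
        zone: moment.astimezone(ZoneInfo(zone)).strftime("%A %Y-%m-%d %H:%M %Z")
        for zone in zones
    }


if __name__ == "__main__":
    for zone, text in local_times(RELEASE_UTC, ZONES).items():
        print(f"{zone:20} {text}")
```

With those inputs it prints Thursday 23:09 CDT for Chicago and Thursday 21:09 PDT for Los Angeles, but Friday 14:09 AEST for Sydney and Friday 16:09 NZST for Auckland: late Thursday in Texas, well into Friday across the Asia-Pacific region.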

I’m in Australia so def Friday. Fu crowdstrike.

Seems like you should be more mad at the International Date Line.

This is going to be a Big Deal for a whole lot of people. I don’t know all the companies and industries that use Crowdstrike but I might guess it will result in airline delays, banking outages, and hospital computer systems failing. Hopefully nobody gets hurt because of it.

A big chunk of New Zealand’s banks apparently run it, cos 3 of the big ones can’t do credit card transactions right now.

It was mayhem at PakNSave a bit ago.

> cos 3 of the big ones can’t do credit card transactions right now

Bitcoin’s still up and running; perhaps people can use that.

Bitcoin Cash maybe. Didn’t they bork Bitcoin (Core) so you have to wait for confirmations in the next block?

Several 911 systems were affected or completely down too

Ironic. They did what they are there to protect against. Fucking up everyone’s shit

Maybe centralizing everything onto one company’s shoulders wasn’t such a great idea after all…

Wait, monopolies are bad? This is the first I’ve ever heard of this concept. So much so that I actually coined the term “monopoly” just now to describe it.

Someone should invent a game, that while playing demonstrates how much monopolies suck for everyone involved (except the monopolist)

And make it so you lose friends and family over the course of the 4+ hour game. Also make a thimble to fight over, that would be dope.

Get your filthy fucking paws off my thimble!

I’m sure a game that’s so on the nose with its message could never become a commercialised marketing gimmick that perversely promotes existing monopolies. Capitalists wouldn’t dare.

CrowdStrike is not a monopoly. The problem here was having a single point of failure: a piece of software that can access the kernel and auto-update, running on every machine in the organization.

At the very least, you should stagger updates. Any change done to a business critical server should be validated first. Automatic updates are a bad idea.
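To sketch what “validate first” can mean in practice: push to one stage, check health, and halt before the next stage if too many hosts in the current one are unhealthy. This is a minimal sketch, assuming each stage is a list of hostnames; `apply_update` and `is_healthy` are hypothetical hooks standing in for real deployment and monitoring tooling. A real health check would also need a soak period and a timeout, since a machine stuck in a boot loop never reports back at all.

```python
from typing import Callable, Optional, Sequence


def staged_rollout(
    stages: Sequence[Sequence[str]],
    apply_update: Callable[[str], None],
    is_healthy: Callable[[str], bool],
    max_failure_rate: float = 0.0,
) -> tuple[list[str], Optional[str]]:
    """Push an update one stage at a time and stop at the first stage that looks unhealthy.

    Returns the hosts that were updated and, if the rollout halted, a reason string.
    """
    updated: list[str] = []
    for index, hosts in enumerate(stages):
        for host in hosts:
            apply_update(host)
            updated.append(host)
        failures = [h for h in hosts if not is_healthy(h)]
        if hosts and len(failures) / len(hosts) > max_failure_rate:
            return updated, f"halted after stage {index}: {len(failures)}/{len(hosts)} hosts unhealthy"
    return updated, None


if __name__ == "__main__":
    stages = [["canary-1", "canary-2"], [f"server-{i}" for i in range(100)]]
    crashed = set()  # hosts the simulated bad update has broken
    # Simulate an update that makes every host it touches fail its health check.
    updated, reason = staged_rollout(stages, crashed.add, lambda h: h not in crashed)
    print(len(updated), reason)
```

With the default `max_failure_rate=0.0`, a single unhealthy canary is enough to stop the rollout, so in the simulation only the two canaries are ever touched.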

Obviously, CrowdStrike messed up, but so did IT departments in every organization that allowed this to happen.

Monopolies aren’t absolute, ever, but having nearly 25% market share is a problem, and is a sign of an oligopoly. Crowdstrike has outsized power and has posted article after article boasting of its dominant market position for many years running.

I think monopoly-like conditions have become so normalised that people don’t even recognise them for what they are.

I mean, I’m sure those companies that have them don’t think so, when they aren’t the cause of multi-industry collapses.

Yes, it’s almost as if there should be laws to prevent that sort of thing. Hmm

Well now that I’ve invented the concept for the first time, we should invent laws about it. We’ll get in early, develop a monopoly on monopoly legislation and steer it so it benefits us.

Wow, monopolies rule!

The too big to fail philosophy at its finest.

CrowdStrike has a new meaning… literally Crowd Strike.

They virtually blew up airports

Wow, I didn’t realize CrowdStrike was widespread enough to be a single point of failure for so much infrastructure. Lot of airports and hospitals offline.

The Federal Aviation Administration (FAA) imposed the global ground stop for airlines including United, Delta, American, and Frontier.

Flights grounded in the US.

An offline server is a secure server!

Honestly my philosophy these days, when it comes to anything proprietary. They just can’t keep their grubby little fingers off of working software.

At least this time it was an accident.

There is nothing less safe than a local network.

AV/XDR is not optional even in offline networks. If you don’t have visibility on your network, you are totally screwed.

Clownstrike

Crowdshite haha gotem

CrowdCollapse

https://www.theregister.com/ has a series of articles on what’s going on technically.

Latest advice…

There is a faulty channel file, so not quite an update. There is a workaround…

- Boot Windows into Safe Mode or the Windows Recovery Environment (WinRE).

- Go to C:\Windows\System32\drivers\CrowdStrike

- Locate and delete the file matching “C-00000291*.sys”

- Boot normally.

The thought of a local computer being unable to boot because some remote server somewhere is unavailable makes me laugh and sad at the same time.

I don’t think that’s what’s happening here. As far as I know it’s an issue with a driver installed on the computers, not with anything trying to reach out to an external server. If that were the case you’d expect it to fail to boot any time you don’t have an Internet connection.

Windows is bad but it’s not that bad yet.

It’s just a fun coincidence that the azure outage was around the same time.

Yep, and it’s harder to fix Windows VMs in Azure that are affected, because you can’t boot them into safe mode the same way you can with a physical machine.

Foof. Nightmare fuel.

> expect it to fail to boot any time you don’t have an Internet connection.

So, like the Ubisoft umbilical but for OSes.

Edit: name of publisher not developer.

Yep, this is the stupid timeline. Y2K happening due to the nuances of calendar systems might have sounded dumb at the time, but it doesn’t now. Y2K happening because of some unknown contractor’s YOLO Friday update definitely does.